In the world of generative AI, building a functional workflow is just the beginning. The real challenge lies in optimization, because the search space for tuning a modern AI workflow is astronomically large. Consider a simple workflow with four tunable steps and three tuning methods per step, each method offering just four options. Each step then has 4^3 = 64 possible settings, so the whole workflow has (4^3)^4 = 64^4, or over 16 million, possible configurations. Searching this space by brute force would cost around $168,000 and take several weeks. In contrast, Cognify can solve this exact problem in 24 minutes for just $5. In this post, we're pulling back the curtain on the core technology that makes this possible: AdaSeek, our novel adaptive search algorithm.
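The configuration count is easy to verify. The snippet below only checks the arithmetic; the variable names are ours and are not part of Cognify.

```python
# Sanity check of the search-space size described above.
steps = 4               # tunable steps in the workflow
methods_per_step = 3    # tuning methods applied to each step
options_per_method = 4  # choices offered by each method

configs_per_step = options_per_method ** methods_per_step  # 4^3
total_configs = configs_per_step ** steps                  # (4^3)^4

print(f"{configs_per_step} configurations per step")      # 64
print(f"{total_configs:,} configurations overall")        # 16,777,216
```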

How AdaSeek Works: A Principled Approach

AdaSeek is an intelligent search algorithm designed to efficiently navigate the vast, complex space of possible AI workflow configurations. Its power comes from a design philosophy that balances efficiency, coverage, and budget awareness. Let's break it down.

1. [Result-Driven] Evaluation-Based Iterative Search

AdaSeek operates on a simple but powerful principle: learn from every result. Each time a workflow configuration is tested, its metric scores are fed back into the system. This creates an iterative loop in which every trial makes the search smarter. Unlike static methods, AdaSeek continuously refines its understanding of the search space, allowing it to progressively focus on more promising areas.
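To make the feedback loop concrete, here is a minimal sketch of an evaluation-driven search. It is not AdaSeek's implementation: the knobs in `OPTIONS`, the scoring function `toy_evaluate`, and the "mutate the best configuration so far" proposal rule are all assumptions chosen to keep the example small.

```python
import random

# Hypothetical knobs and options, for illustration only.
OPTIONS = {
    "model": ["small", "large"],
    "few_shot": [0, 2, 4],
    "reasoning": [False, True],
}

def toy_evaluate(config):
    """Stand-in for running the workflow and scoring its outputs."""
    score = 0.4
    score += 0.2 if config["model"] == "large" else 0.0
    score += 0.05 * config["few_shot"]
    score += 0.1 if config["reasoning"] else 0.0
    return score

def propose(history, rng):
    """Use past results: change one knob of the best configuration seen so far."""
    if not history:
        return {knob: rng.choice(opts) for knob, opts in OPTIONS.items()}
    best_config, _ = max(history, key=lambda trial: trial[1])
    candidate = dict(best_config)
    knob = rng.choice(list(OPTIONS))
    candidate[knob] = rng.choice(OPTIONS[knob])
    return candidate

def iterative_search(budget, seed=0):
    rng = random.Random(seed)
    history = []  # every (config, score) pair observed so far
    for _ in range(budget):
        config = propose(history, rng)   # the proposal sees all earlier results
        score = toy_evaluate(config)     # test the configuration
        history.append((config, score))  # feed the score back into the loop
    best = max(history, key=lambda trial: trial[1])
    return best, history

best, history = iterative_search(budget=20)
print("best config:", best[0], "score:", round(best[1], 2))
```

The important part is the shape of the loop: each proposal is a function of the full history, so later trials benefit from earlier ones.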

2. [Efficient] Bayesian-Optimization-Based Sampling

To avoid wasting time on subpar configurations, AdaSeek employs a sophisticated sampling strategy based on Bayesian Optimization. Instead of picking new configurations at random, it builds a probabilistic model of the search space based on past search results. This model determines which untested configurations are most likely to yield high scores. By selecting the next candidate to test based on past experience, AdaSeek dramatically improves search efficiency, finding better solutions with fewer trials.
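One common way to realize this idea for categorical knobs is a Tree-structured Parzen Estimator (TPE) style rule: split past trials into a "good" group and the rest, estimate how often each option appears in each group, and prefer configurations whose options are over-represented among the good trials. The sketch below is our simplified illustration of that technique, not AdaSeek's actual sampler; `SPACE`, `option_weights`, and `suggest` are hypothetical names.

```python
from collections import Counter
from itertools import product

# Hypothetical search space, for illustration only.
SPACE = {"model": ["small", "large"], "few_shot": [0, 2, 4]}

def option_weights(configs, knob):
    """Laplace-smoothed frequency of each option of `knob` among `configs`."""
    options = SPACE[knob]
    counts = Counter(config[knob] for config in configs)
    total = len(configs) + len(options)
    return {option: (counts[option] + 1) / total for option in options}

def suggest(history, good_fraction=0.25):
    """Return the untested config with the highest good-vs-rest likelihood ratio."""
    ranked = sorted(history, key=lambda trial: trial[1], reverse=True)
    n_good = max(1, int(len(ranked) * good_fraction))
    good = [config for config, _ in ranked[:n_good]]
    rest = [config for config, _ in ranked[n_good:]]
    good_w = {knob: option_weights(good, knob) for knob in SPACE}
    rest_w = {knob: option_weights(rest, knob) for knob in SPACE}

    def ratio(config):
        r = 1.0
        for knob in SPACE:
            r *= good_w[knob][config[knob]] / rest_w[knob][config[knob]]
        return r

    tested = [config for config, _ in history]
    untested = [
        dict(zip(SPACE, combo))
        for combo in product(*SPACE.values())
        if dict(zip(SPACE, combo)) not in tested
    ]
    return max(untested, key=ratio) if untested else None

# Made-up past results: the only "large" trial scored best.
history = [
    ({"model": "small", "few_shot": 0}, 0.30),
    ({"model": "small", "few_shot": 2}, 0.35),
    ({"model": "large", "few_shot": 0}, 0.60),
    ({"model": "small", "few_shot": 4}, 0.40),
]
print(suggest(history))
```

Enumerating every untested configuration only works for tiny spaces like this one. In a space with millions of configurations, a sampler would draw a limited number of candidates from the "good" model and rank those instead.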

3. [Coverage] Hierarchical Search

The configurations and knobs that can be tuned for modern AI workflows have different impacts: high-level decisions, like determining steps in a workflow, have a massive impact, while low-level tweaks, like changing a word in a prompt, offer finer-grained control. AdaSeek leverages this insight to build a hierarchical search with three layers: workflow structure, model and tool selection, and prompt tuning. It chooses a workflow structure first and then drills down to model/tool selection and finally prompt tuning, ensuring comprehensive coverage without getting lost in the weeds.
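The layering can be sketched as three nested loops, coarsest decision outermost. This is a simplified illustration under our own assumptions: the option lists, the toy effect sizes in `EFFECT`, and the fixed number of inner trials per layer are hypothetical, and the sketch gives every structure the same inner budget rather than adapting it.

```python
import random

# Hypothetical options per layer, ordered from coarsest to finest.
STRUCTURES = ["single_step", "plan_then_answer"]
MODELS = ["small", "large"]
PROMPTS = ["plain", "few_shot", "step_by_step"]

# Toy effect sizes: coarse decisions move the score more than fine ones.
EFFECT = {
    "single_step": 0.0, "plan_then_answer": 0.3,
    "small": 0.0, "large": 0.1,
    "plain": 0.0, "few_shot": 0.03, "step_by_step": 0.05,
}

def toy_score(structure, model, prompt):
    """Stand-in for evaluating one fully specified workflow."""
    return 0.4 + EFFECT[structure] + EFFECT[model] + EFFECT[prompt]

def hierarchical_search(models_per_structure=2, prompts_per_model=2, seed=0):
    rng = random.Random(seed)
    best_config, best_score, trials = None, float("-inf"), 0
    for structure in STRUCTURES:                                   # layer 1: workflow structure
        for model in rng.sample(MODELS, models_per_structure):     # layer 2: model/tool selection
            for prompt in rng.sample(PROMPTS, prompts_per_model):  # layer 3: prompt tuning
                score = toy_score(structure, model, prompt)
                trials += 1
                if score > best_score:
                    best_config, best_score = (structure, model, prompt), score
    return best_config, best_score, trials

best_config, best_score, trials = hierarchical_search()
print(best_config, round(best_score, 2), "after", trials, "trials")
```

Because each inner layer is searched only within the context of the outer choice, the number of trials grows with the per-layer budgets rather than with the full product of all options.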

4. [Budget-Aware] Adaptive Search Budget Allocation

Every optimization run operates within a budget, whether it's time or money. AdaSeek is designed to maximize results within these constraints. It adaptively allocates its search budget, dedicating more trials to the most promising configurations (exploitation) while still investing a portion of its budget in exploring new, uncertain areas (exploration). This dynamic allocation ensures that your budget is spent where it matters most, delivering the highest possible return on investment.
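A classic way to trade off exploitation and exploration under a fixed trial budget is the UCB1 bandit rule, which we use here as a stand-in; the post does not specify AdaSeek's actual allocation policy. The candidate names, their scores in `TOY_MEANS`, and the function `allocate_budget` are hypothetical, and the scores are noise-free to keep the example deterministic.

```python
import math

# Hypothetical candidate configurations and their (noise-free) toy scores.
TOY_MEANS = {"config_A": 0.9, "config_B": 0.5, "config_C": 0.2}

def allocate_budget(evaluate, arms, budget, c=1.0):
    """Spend `budget` trials across `arms` using the UCB1 rule."""
    pulls = {arm: 0 for arm in arms}
    totals = {arm: 0.0 for arm in arms}
    for t in range(1, budget + 1):
        untried = [arm for arm in arms if pulls[arm] == 0]
        if untried:
            arm = untried[0]  # try every candidate once first
        else:
            # mean score (exploitation) + uncertainty bonus (exploration)
            arm = max(
                arms,
                key=lambda a: totals[a] / pulls[a]
                + c * math.sqrt(2 * math.log(t) / pulls[a]),
            )
        totals[arm] += evaluate(arm)
        pulls[arm] += 1
    return pulls

pulls = allocate_budget(TOY_MEANS.get, list(TOY_MEANS), budget=32)
print(pulls)
```

With a budget of 32 trials, most of the trials go to the strongest candidate, while the weaker ones still receive occasional trials because their uncertainty bonus grows the longer they are left untested.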

Figure 1: AdaSeek Illustration. This example shows how AdaSeek spends a search budget of 32 iterations to efficiently navigate the search space of a simple workflow with two architecture variations. Each color represents a step cog variation (e.g., a different model or a rewritten code piece); each symbol represents a different weight (e.g., a system prompt or a set of few-shot examples). The search budget is gradually directed to the more fruitful configurations (here, the first step cog configuration), and the best result is updated as the search proceeds. The second architecture's search is omitted.

Evaluation Results

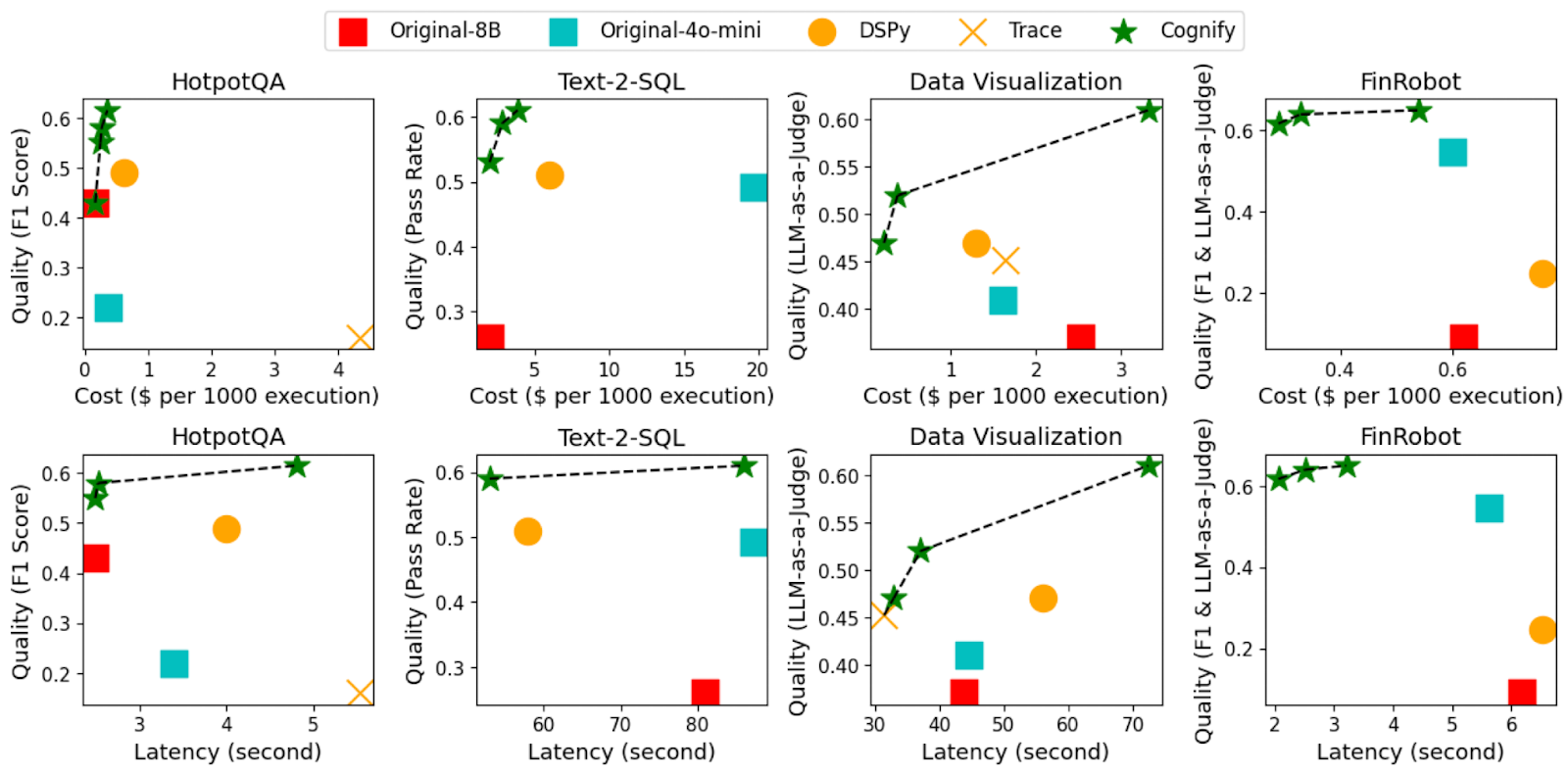

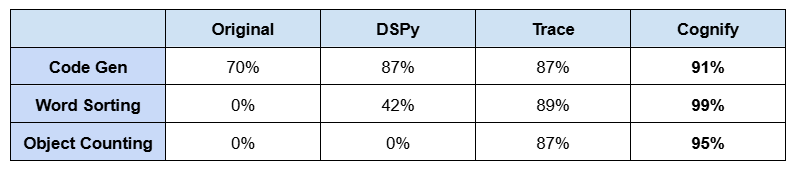

Now, let's look at the evaluation of Cognify on six representative gen-AI workflows (HotpotQA, Text-to-SQL, Data Visualization, Financial Analysis, Code Generation, and BIG-bench). As shown in Figures 2 and 3, Cognify improves generation quality by up to 2.8x, cuts cost by 10x, and reduces latency by 2.7x compared to the original expert-written workflows. Cognify also outperforms DSPy and Microsoft Trace, with up to 2.6x higher generation quality, up to 10x cost reduction, and up to 3x latency reduction.

Figure 2: Cognify's Optimized Generation Quality and Cost/Latency. Dashed lines show the Pareto frontier (upper left is better). Cost is shown as the model API dollar cost per 1,000 requests. Cognify selects models from GPT-4o-mini and Llama-8B. DSPy and Trace do not support model selection and are given GPT-4o-mini for all steps. Trace results for Text-to-SQL and FinRobot have 0 quality and are not included.

Figure 3: Accuracy Comparison on Additional Workloads. Code Generation is from HumanEval; Word Sorting and Object Counting are from BIG-bench.

A New Paradigm for AI Optimization

AdaSeek is more than just a clever algorithm; it represents a fundamental shift in how we approach AI development. By replacing manual guesswork with efficient, automated, and adaptive search, Cognify empowers teams to build higher-quality AI products faster and more reliably than ever before.